Revolutionizing Risk-Based Alerting

What is risk-based alerting (RBA)?

Risk-based alerting (RBA) is a strategy that uses data analysis and prioritization to issue alerts or notifications when potential risks reach predefined levels. Each alert is assessed for severity and potential impact: an alert indicating a potential data breach or critical system compromise is assigned a higher risk level than one related to an isolated, low-impact incident. Once detected events have been assigned risk scores and amplification factors, the system prioritizes alerts by their associated risk levels. Higher-risk events receive higher priority and are escalated to security analysts for immediate action or to automated response mechanisms.
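
The scoring and escalation flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the severity scale, the escalation threshold of 80, the cap at 100 and the names `Event`, `risk_score` and `prioritize` are all hypothetical and do not come from any specific product.

```python
from dataclasses import dataclass

# Hypothetical severity scale and escalation threshold, chosen for illustration.
BASE_SCORES = {"low": 10, "medium": 40, "high": 70, "critical": 90}
ESCALATION_THRESHOLD = 80


@dataclass
class Event:
    name: str
    severity: str         # key into BASE_SCORES
    amplification: float  # multiplier reflecting potential impact


def risk_score(event: Event) -> float:
    """Base severity score scaled by the amplification factor, capped at 100."""
    return min(100.0, BASE_SCORES[event.severity] * event.amplification)


def prioritize(events: list[Event]) -> list[tuple[Event, float, bool]]:
    """Return (event, score, escalate) tuples, highest risk first."""
    scored = [(event, risk_score(event)) for event in events]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(event, score, score >= ESCALATION_THRESHOLD) for event, score in scored]


events = [
    Event("isolated failed login", "low", 1.0),
    Event("possible data exfiltration", "high", 1.5),
    Event("port scan from guest network", "medium", 1.2),
]

for event, score, escalate in prioritize(events):
    action = "escalate" if escalate else "log"
    print(f"{score:5.1f}  {action:8}  {event.name}")
```

In this sketch only the possible data exfiltration crosses the threshold and would be handed to an analyst or an automated response; the other two events are recorded without raising an alert.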

This approach is more efficient and effective than generating an alert for every possible event, and it helps companies manage and respond to risks in a more targeted way. Over time, risk-based alerting should evolve with an organization’s experience so that the security strategy remains effective as the threat landscape changes. It also matters financially: by targeting high-risk areas and threats, companies can use their security budget more efficiently and invest where it is needed most.

Optimized RBA: how NDR uses AI & context to prioritize threats

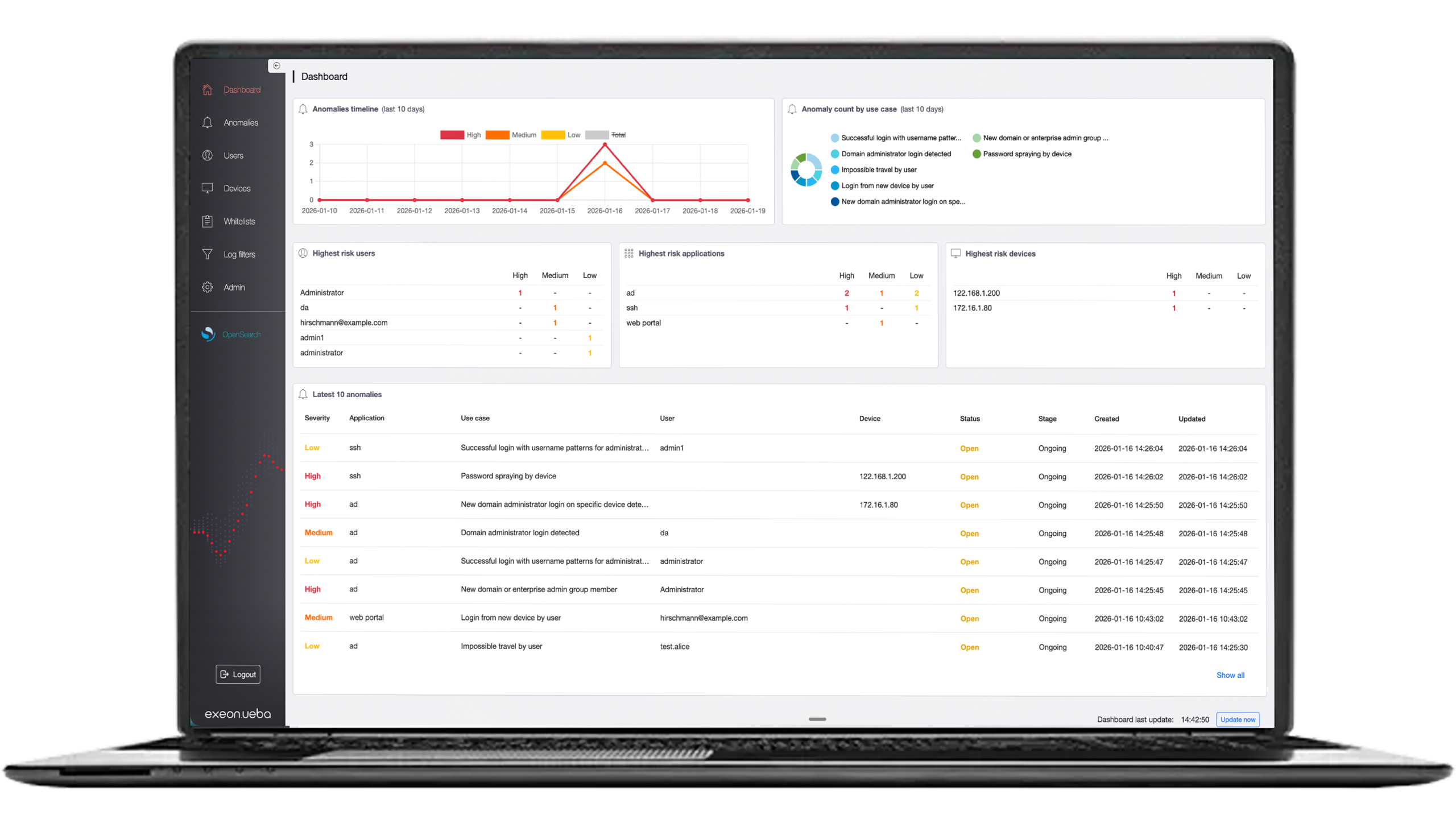

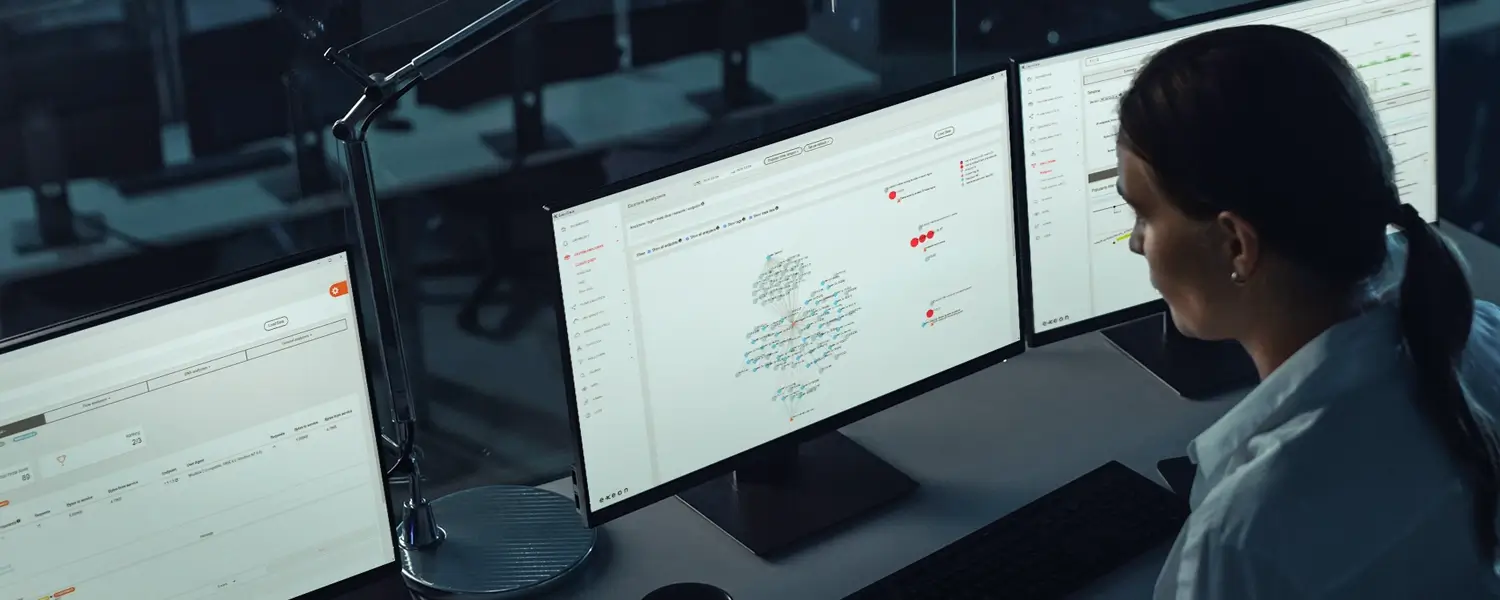

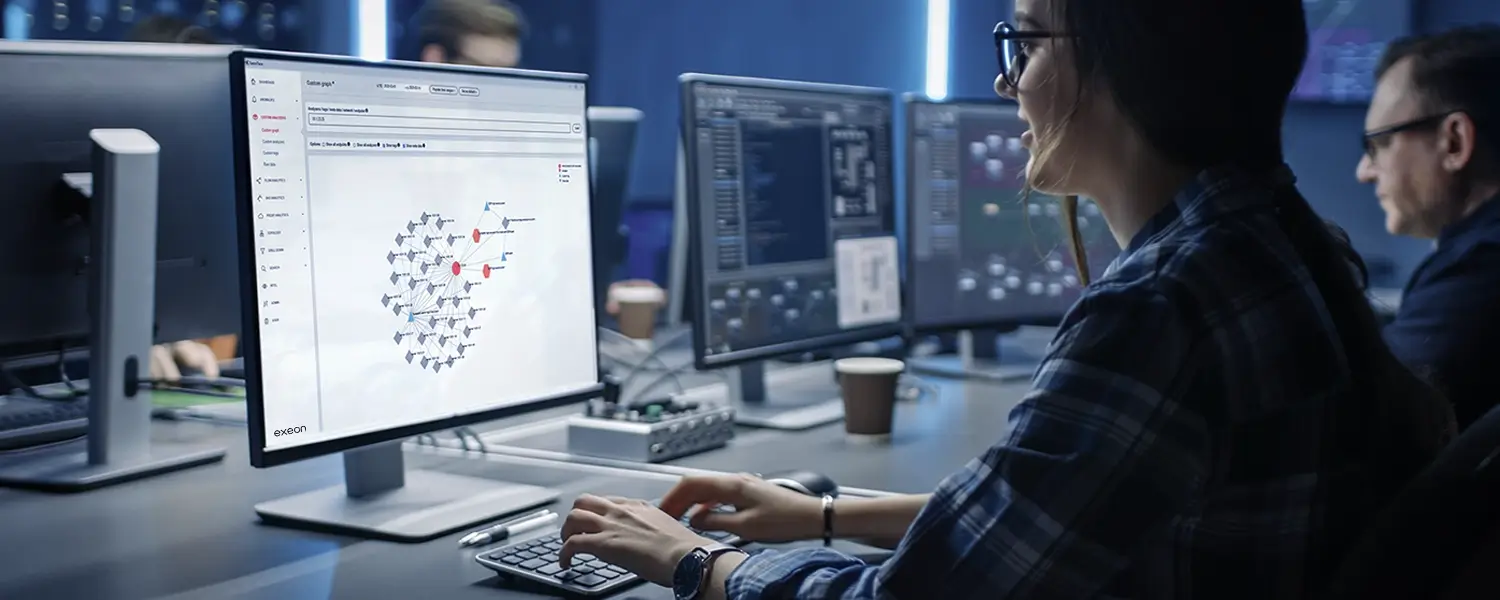

Network Detection and Response (NDR) combines continuous monitoring, machine learning and contextual intelligence to deliver advanced threat scores, which can be weighted by a company’s own risk assumptions. Alerts are then prioritized and categorized according to the perceived level of risk, improving threat identification and response.

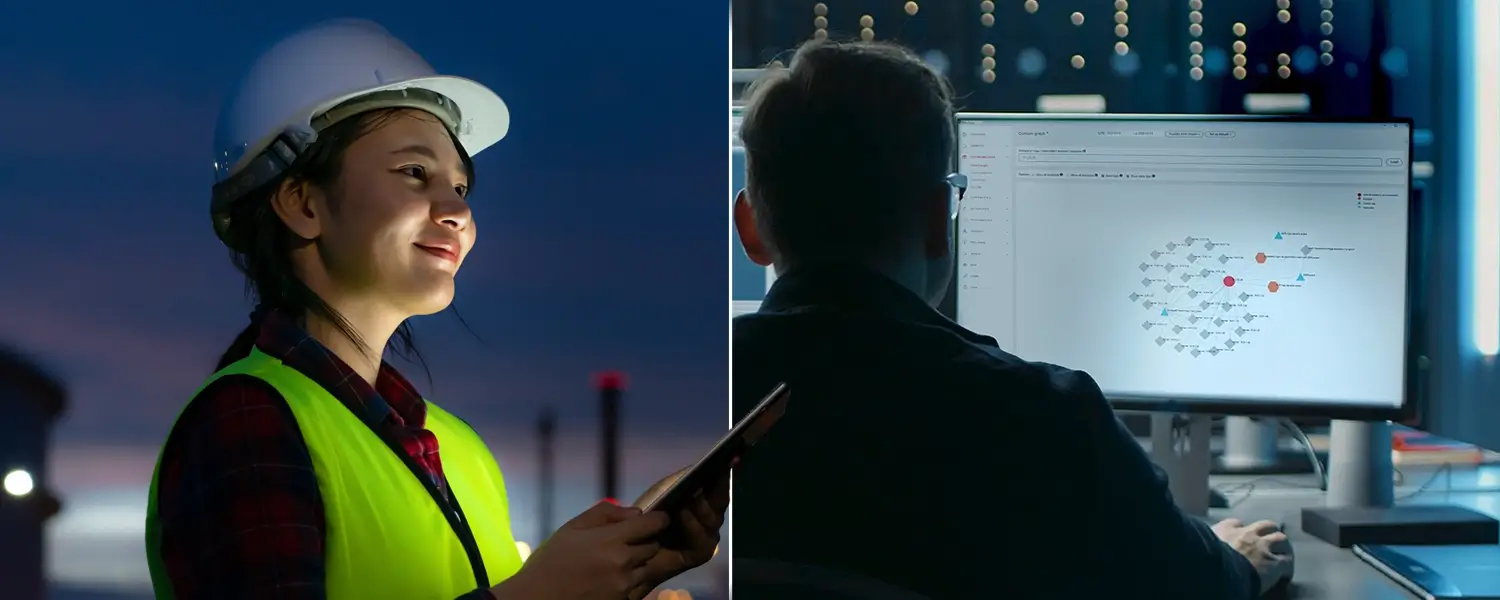

NDR continuously monitors network traffic, endpoints and other data sources to identify potentially suspicious or malicious activity. It collects and aggregates data from network devices, servers, applications and endpoints. This data includes network logs (NetFlow, IPFIX, firewall logs) as well as other communication logs, events, alerts and connections generated by the system or triggered by internal servers. The NDR normalizes the collected data into a consistent format and enriches it with as much context as possible: metadata, asset details, user information and the possible impact of the event, for example based on its source and destination. The result is more comprehensive monitoring of possible anomalies, with contextual information about network traffic and user behavior.
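
As a rough illustration of normalization and enrichment, the following Python sketch maps two differently shaped records onto one schema and attaches asset details. The IPFIX field names are standard information element names; the firewall field names (`src`, `dst`, `dport`, `bytes`), the `ASSETS` inventory and all function names are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical asset inventory; a real deployment would query a CMDB or directory.
ASSETS = {
    "10.0.1.15": {"hostname": "db-prod-01", "owner": "finance", "critical": True},
    "10.0.2.40": {"hostname": "laptop-137", "owner": "sales", "critical": False},
}

# Common field -> names it may carry in each source (IPFIX element, assumed firewall key).
FIELD_ALIASES = {
    "src_ip": ("sourceIPv4Address", "src"),
    "dst_ip": ("destinationIPv4Address", "dst"),
    "dst_port": ("destinationTransportPort", "dport"),
    "bytes_sent": ("octetDeltaCount", "bytes"),
}


@dataclass
class FlowRecord:
    src_ip: str
    dst_ip: str
    dst_port: int
    bytes_sent: int
    src_asset: Optional[dict] = None
    dst_asset: Optional[dict] = None


def _pick(raw: dict, names: tuple):
    """Return the value of the first alias present in the raw record."""
    for name in names:
        if name in raw:
            return raw[name]
    raise KeyError(f"none of {names} found in record")


def normalize(raw: dict) -> FlowRecord:
    """Map source-specific field names onto one common schema."""
    values = {field: _pick(raw, aliases) for field, aliases in FIELD_ALIASES.items()}
    return FlowRecord(
        src_ip=str(values["src_ip"]),
        dst_ip=str(values["dst_ip"]),
        dst_port=int(values["dst_port"]),
        bytes_sent=int(values["bytes_sent"]),
    )


def enrich(record: FlowRecord) -> FlowRecord:
    """Attach asset context for source and destination where it is known."""
    record.src_asset = ASSETS.get(record.src_ip)
    record.dst_asset = ASSETS.get(record.dst_ip)
    return record


ipfix = {"sourceIPv4Address": "10.0.2.40", "destinationIPv4Address": "10.0.1.15",
         "destinationTransportPort": 5432, "octetDeltaCount": 48211}
firewall = {"src": "10.0.2.40", "dst": "203.0.113.9", "dport": "443", "bytes": "1200"}

for raw in (ipfix, firewall):
    print(enrich(normalize(raw)))
```

Unknown addresses simply keep `None` as their asset context, so downstream scoring can treat "no context" as its own case.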

Its benefits for your organization

The added value of NDR for risk-based alerting, beyond continuous monitoring, lies in machine learning: NDR uses advanced analytics, contextual information, threat data and behavioral analysis to assess the potential risk of detected events in different network areas. NDR solutions can weight triggered events differently and respond to incidents based on the risk assessment, e.g., by isolating vulnerable endpoints or blocking malicious traffic.

Enhanced threat prioritization

NDR solutions use machine learning and contextual intelligence to assess and prioritize threats based on their risk levels, helping security teams focus on the most critical incidents first.

Behavior-based threat detection

Machine learning algorithms detect deviations from normal network behavior, identifying sophisticated attacks that traditional signature-based methods might miss.
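
To make the idea of "deviation from normal behavior" concrete, here is a deliberately simple Python sketch. It uses a z-score against a historical baseline as a stand-in for the machine learning models an NDR would actually run; the threshold of 3.0, the sample traffic volumes and the function names are assumptions for illustration only.

```python
from statistics import mean, stdev


def anomaly_score(baseline: list[float], observed: float) -> float:
    """Distance of the observation from the baseline mean, in standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # requires at least two baseline samples
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag observations that lie more than `threshold` deviations from normal."""
    return anomaly_score(baseline, observed) > threshold


# Hypothetical daily outbound traffic of one host, in megabytes.
baseline_mb = [120, 135, 128, 140, 132, 125, 138]

print(is_anomalous(baseline_mb, 131))  # a volume close to the usual range
print(is_anomalous(baseline_mb, 900))  # a sudden spike far outside the baseline
```

No signature is involved here: the spike is flagged purely because it differs from what this host normally does, which is the property that lets behavior-based detection catch activity a signature database has never seen.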

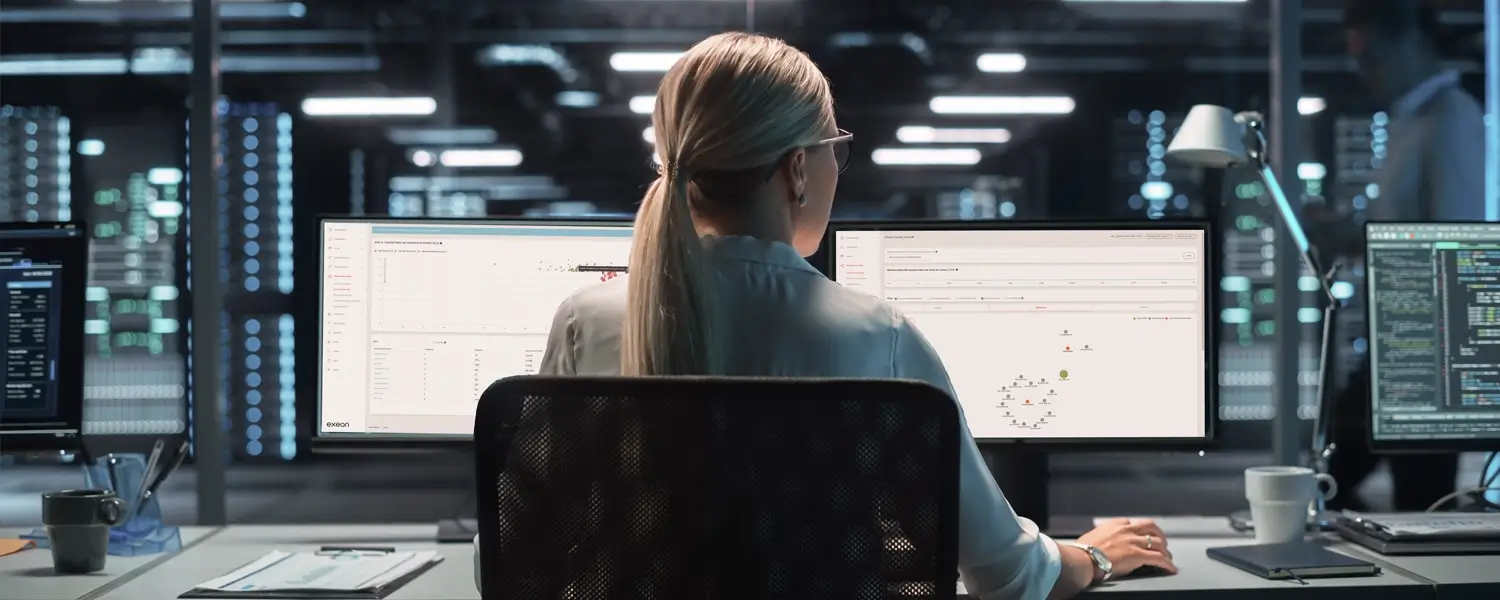

Reduced alert fatigue

By distinguishing between routine events and high-risk anomalies, NDR reduces unnecessary alerts, allowing analysts to concentrate on genuine threats and improving response efficiency.

Customizable risk scoring

Organizations can configure "risk boosting" factors to assign different threat weights to specific networks or assets, enabling more precise risk-based alerting and response strategies.
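
A "risk boosting" configuration could look roughly like the Python sketch below, which weights a base score by the network segment an address belongs to. The CIDR ranges, boost factors, cap at 100 and function names are hypothetical examples, not the configuration format of any particular NDR.

```python
import ipaddress

# Hypothetical boost factors per network segment.
RISK_BOOSTS = {
    "10.0.1.0/24": 2.0,      # production database segment
    "10.0.2.0/24": 1.0,      # office clients
    "192.168.50.0/24": 0.5,  # guest Wi-Fi
}

_NETWORKS = [(ipaddress.ip_network(cidr), factor) for cidr, factor in RISK_BOOSTS.items()]


def boost_for(ip: str, default: float = 1.0) -> float:
    """Return the boost of the most specific configured network containing ip."""
    addr = ipaddress.ip_address(ip)
    matches = [(net.prefixlen, factor) for net, factor in _NETWORKS if addr in net]
    if not matches:
        return default
    return max(matches, key=lambda match: match[0])[1]


def boosted_score(base_score: float, ip: str) -> float:
    """Scale a base risk score by the boost of the affected network, capped at 100."""
    return min(100.0, base_score * boost_for(ip))


for ip in ("10.0.1.15", "10.0.2.40", "192.168.50.7"):
    print(ip, boosted_score(40, ip))
```

The same base score of 40 therefore ranks very differently depending on where the event occurs, which is what allows an organization to encode its own risk assumptions.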

Automated incident response

NDR can automatically respond to high-risk threats, such as isolating vulnerable endpoints or blocking malicious traffic, minimizing the time attackers have to exploit vulnerabilities.

Comprehensive network visibility

By continuously monitoring network traffic, endpoints, and communication logs, NDR provides deep insights into potential security threats across the entire IT environment.

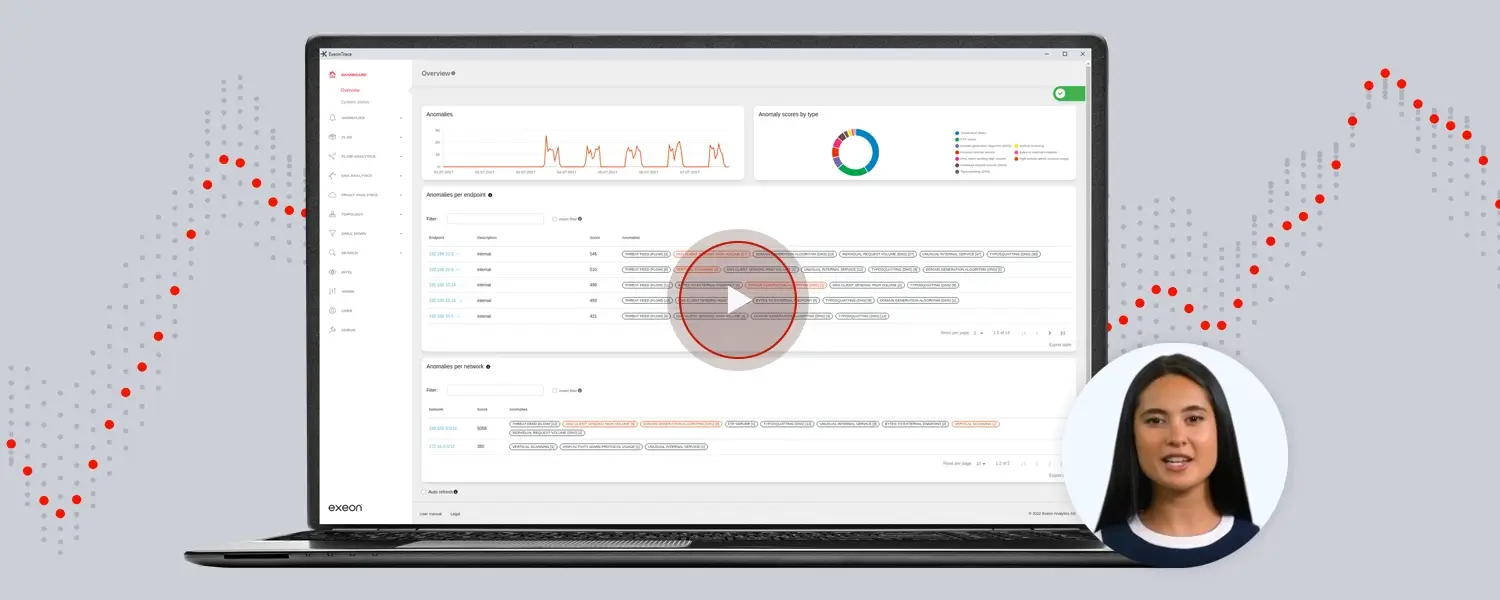
